NVMe SSDs provide very fast storage access, and their performance significantly surpasses that of SATA SSDs and traditional HDDs. As SSD technology advances, a single NVMe SSD can be overkill for most compute servers. However, sharing the resource among multiple servers often limits the SSD’s performance because the network becomes an I/O bottleneck. NVMe SR-IOV was introduced to address this issue. The multi-server interconnect bottleneck is harder to solve, but we can use multiple VMs on one bare-metal server to better utilize the bandwidth and throughput that a powerful NVMe SSD offers. Before going on, let’s first see how SR-IOV works and how it improves performance.

How NVMe SR-IOV works

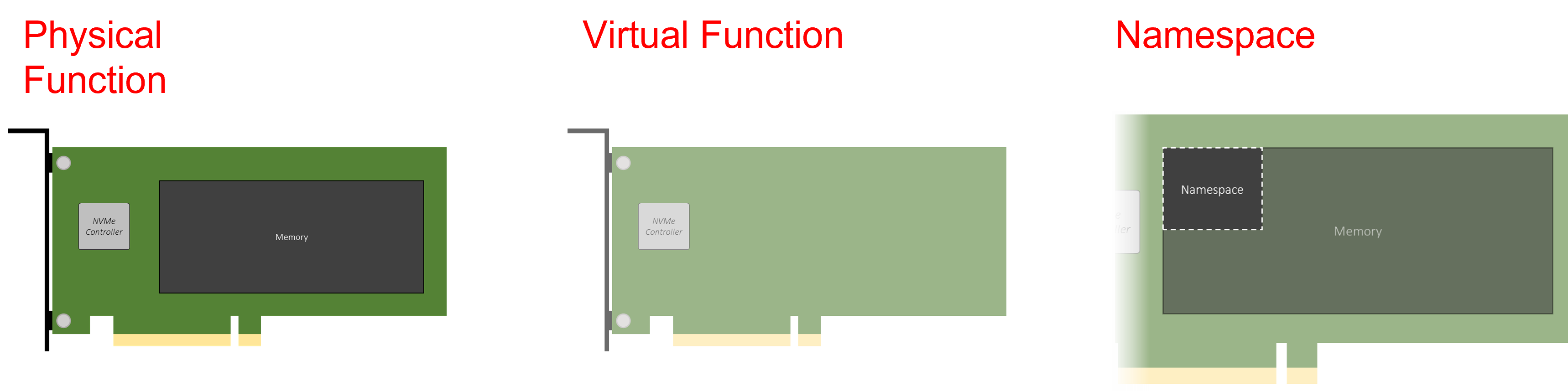

There are three key terms in SR-IOV: the physical function (PF), the virtual function (VF), and the namespace (NS).

- A PF refers to the physical device, that is, the NVMe SSD itself. Everything in SR-IOV, such as creating VFs and namespaces, starts from a PF.

- A VF is derived from a PF. Multiple VFs share the device’s underlying hardware and PCI Express link.

- A namespace is an isolated set of logical blocks addressable by the host software.
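To make the PF/VF relationship concrete, here is a minimal Python sketch of how a Linux host typically asks a PF to expose VFs, using the kernel's standard `sriov_totalvfs` and `sriov_numvfs` sysfs attributes. It illustrates the generic Linux mechanism only, not the Falcon 5208 management software. The function name `enable_vfs` and the PCI address in the usage note are hypothetical.

```python
from pathlib import Path


def enable_vfs(pf_dir: Path, requested: int) -> int:
    """Ask the PF whose sysfs directory is `pf_dir` to expose `requested` VFs.

    `pf_dir` is assumed to look like /sys/bus/pci/devices/<PCI address>.
    Returns the number of VFs that was requested and written.
    """
    # sriov_totalvfs reports the maximum number of VFs the PF supports.
    total = int((pf_dir / "sriov_totalvfs").read_text().strip())
    if not 0 <= requested <= total:
        raise ValueError(f"requested {requested} VFs, device supports 0..{total}")

    numvfs = pf_dir / "sriov_numvfs"
    current = int(numvfs.read_text().strip())
    if current == requested:
        return requested

    # The kernel refuses to change a non-zero VF count directly,
    # so existing VFs are disabled (set to 0) before a new count is written.
    if current != 0:
        numvfs.write_text("0")
    if requested != 0:
        numvfs.write_text(str(requested))
    return requested
```

On a real host you would run something like `enable_vfs(Path("/sys/bus/pci/devices/0000:3b:00.0"), 4)` as root, where the address is a placeholder for your SSD's PF. Depending on the drive, the NVMe controller may also need resources assigned to each VF before it comes online, which this sketch does not cover.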

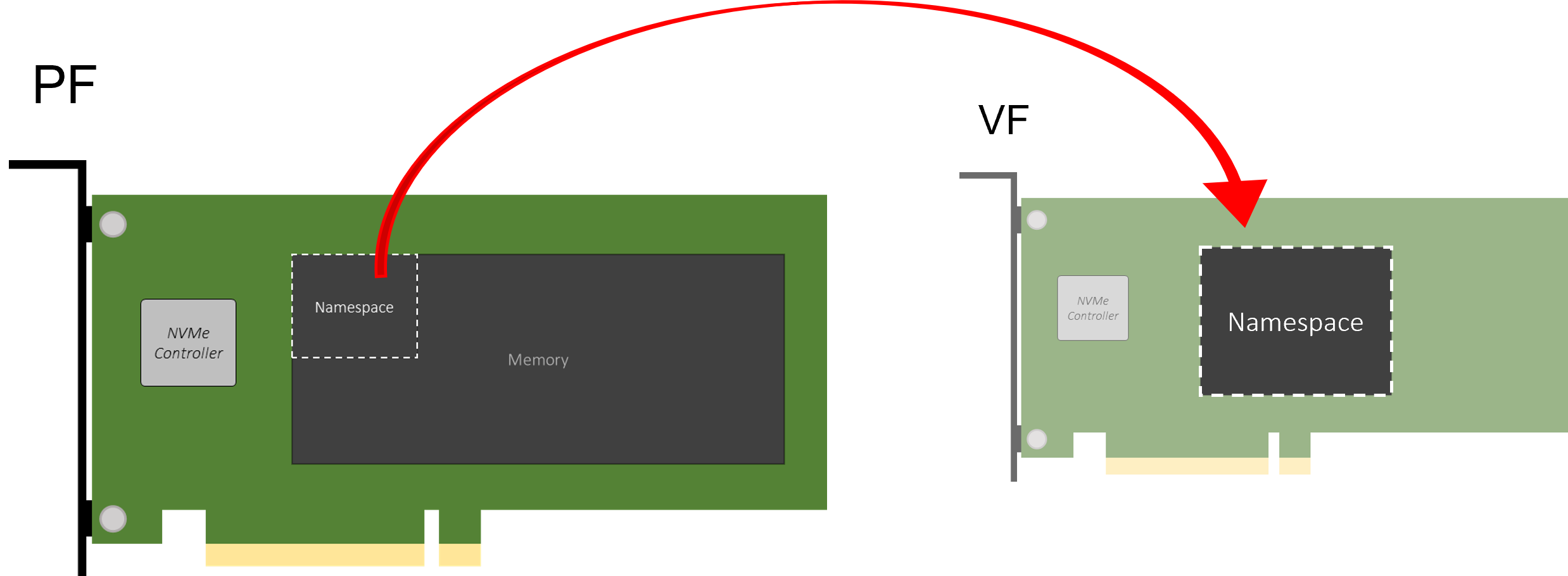

Users can define the sharing property of a namespace when creating it (discussed later in this section). For simplicity, you can think of a namespace as a partition of the SSD that holds a certain amount of storage. The namespace is therefore the actual storage resource, while a VF is needed to handle the I/O between a virtual machine and the namespace. Attach a namespace to a VF so that the VM can access the storage capacity:

In practice, a namespace is attached to a VF, and the VF is then provisioned to a host. A host can have multiple VFs provisioned to it, but each VF can be provisioned to only one host. Namespaces are different, because users can define their sharing property. A “private” namespace can be attached to only one VF, whereas a “shared” namespace can be attached to multiple VFs simultaneously. Thus, if you want the data stored in a namespace to be accessible from different hosts, create a shared namespace and attach it to the VFs provisioned to those hosts. If only one host should access the data, use a private namespace. In addition, a namespace can be attached to a VF even after the VF has been provisioned to a host. In other words, users can add more storage capacity to a VF whenever needed.
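The attachment and provisioning rules above can be summarized in a small Python model. It is only an illustrative sketch: the class and method names (`Namespace`, `VirtualFunction`, `attach`, `provision`) are made up for this example and are not an API of the NVMe driver or of any management software.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class Namespace:
    nsid: int
    size_gb: int
    shared: bool = False                       # False means "private"
    attached_to: Set[int] = field(default_factory=set)  # ids of attached VFs


@dataclass
class VirtualFunction:
    vf_id: int
    host: Optional[str] = None                 # None means not provisioned yet
    namespaces: List[Namespace] = field(default_factory=list)

    def provision(self, host: str) -> None:
        # Rule: one VF can be provisioned to only one host.
        if self.host is not None and self.host != host:
            raise RuntimeError(f"VF {self.vf_id} already belongs to {self.host}")
        self.host = host

    def attach(self, ns: Namespace) -> None:
        if self.vf_id in ns.attached_to:
            return  # already attached, nothing to do
        # Rule: a private namespace can be attached to only one VF.
        if not ns.shared and ns.attached_to:
            raise RuntimeError(f"namespace {ns.nsid} is private and already attached")
        ns.attached_to.add(self.vf_id)
        self.namespaces.append(ns)

    @property
    def capacity_gb(self) -> int:
        # Storage visible through this VF is the sum of its namespaces.
        return sum(ns.size_gb for ns in self.namespaces)
```

For example, attaching an 800 GB private namespace and a 400 GB shared namespace to one VF gives that VF 1200 GB, while a second VF can attach only the shared namespace. Note that `attach` works whether or not the VF has already been provisioned, which mirrors the ability to add capacity to a VF at any time.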

Given the capabilities of VFs and namespaces, users can allocate storage capacity precisely according to individual application needs and scale up capacity painlessly along the way.

How it benefits performance

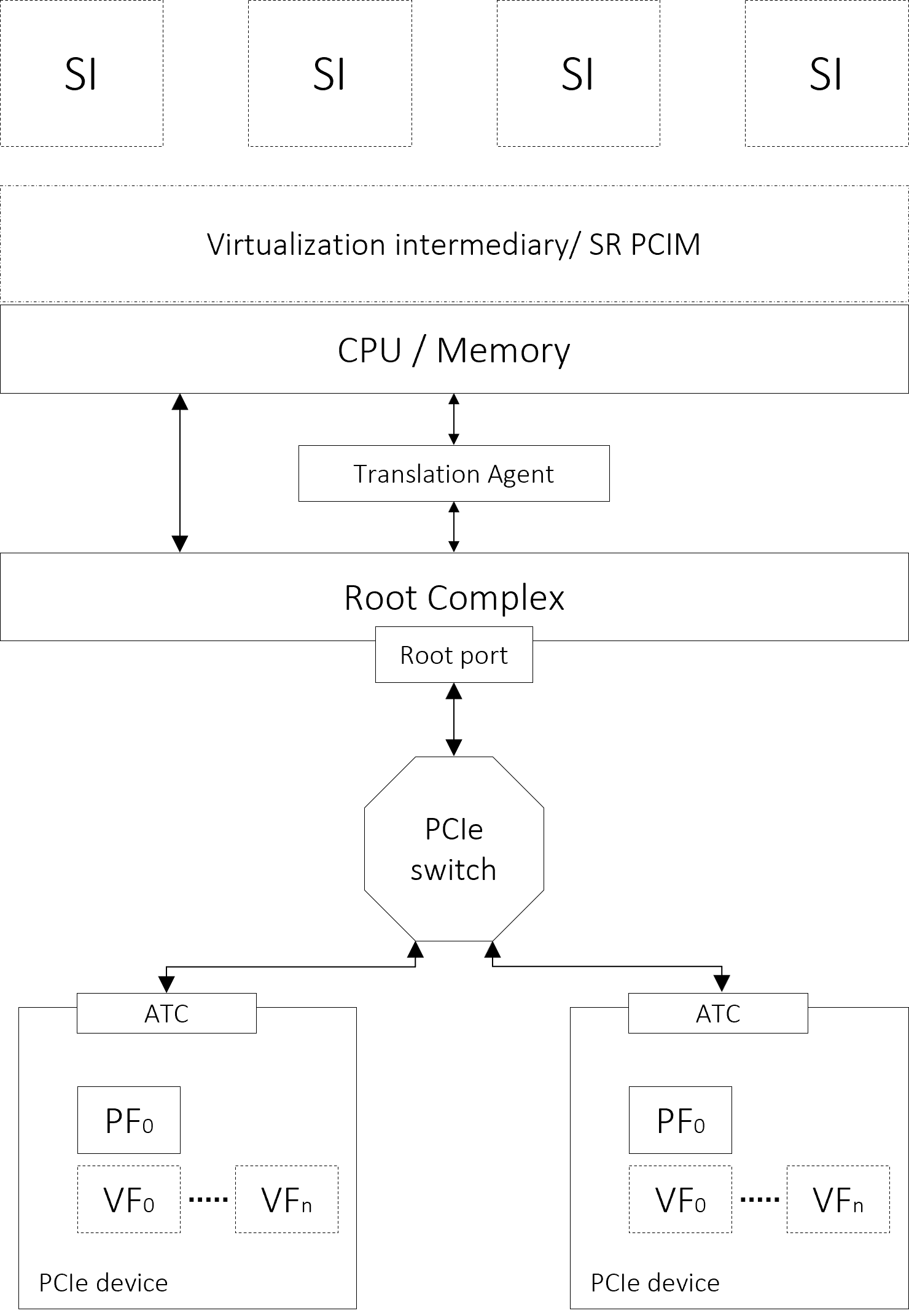

SR-IOV architecture:

Virtual intermediary:

A virtual intermediary (VI) is a software layer that manages all interactions between the virtual machines and the physical compute and storage resources. It is often a hypervisor or a virtual machine monitor (VMM). In a classical VM deployment, the VI handles all the interrupts and determines which data should be sent to which virtual machine. As a result, the I/O performance of the system declines because handling all these interrupts consumes considerable CPU resources, and it gets worse as the number of VMs increases.

Bypassing hypervisor or VMM for performance improvement

A direct way to solve this performance issue is to lower the CPU overhead. That is, instead of the VI, let something else handle the I/O interrupts and leave the CPU for more meaningful tasks. This is where SR-IOV comes in. When an NVMe SSD VF is provisioned to a virtual machine, it creates a direct I/O path between the virtual machine and the physical device or, more precisely, the physical resources associated with the namespaces attached to the VF. This direct I/O path bypasses the hypervisor, allowing the VM to handle I/O interrupts on its own. Because the VM now accesses the NVMe SSD directly, just as a physical server would access an SSD installed in it, the virtual system can use the bandwidth the physical SSD provides without intervening software layers, so performance is near bare metal.

Virtualization looks great so far: the SSD can be fully utilized, and performance is not affected by network latency as long as the data does not have to travel to other compute devices. The drawback is that the NVMe SSD is still not flexible. It is accessible only within the server in which it is installed, and the CPU requirement becomes very high when you need to create many virtual machines to match the SSD’s performance. What if we could disaggregate the SSD from the server and provision its virtual functions to different compute machines?

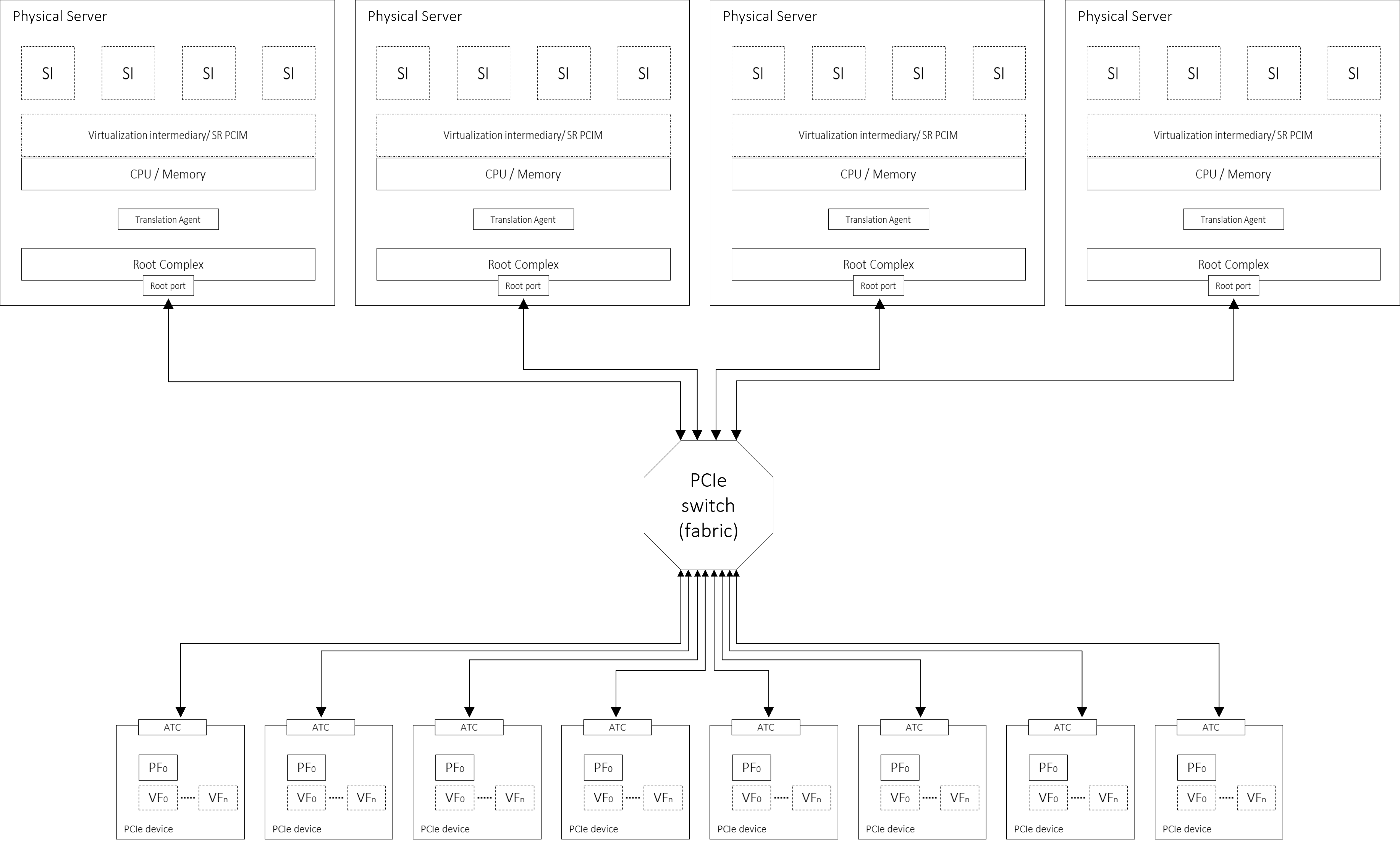

Multi-host architecture and composable SSD virtual functions

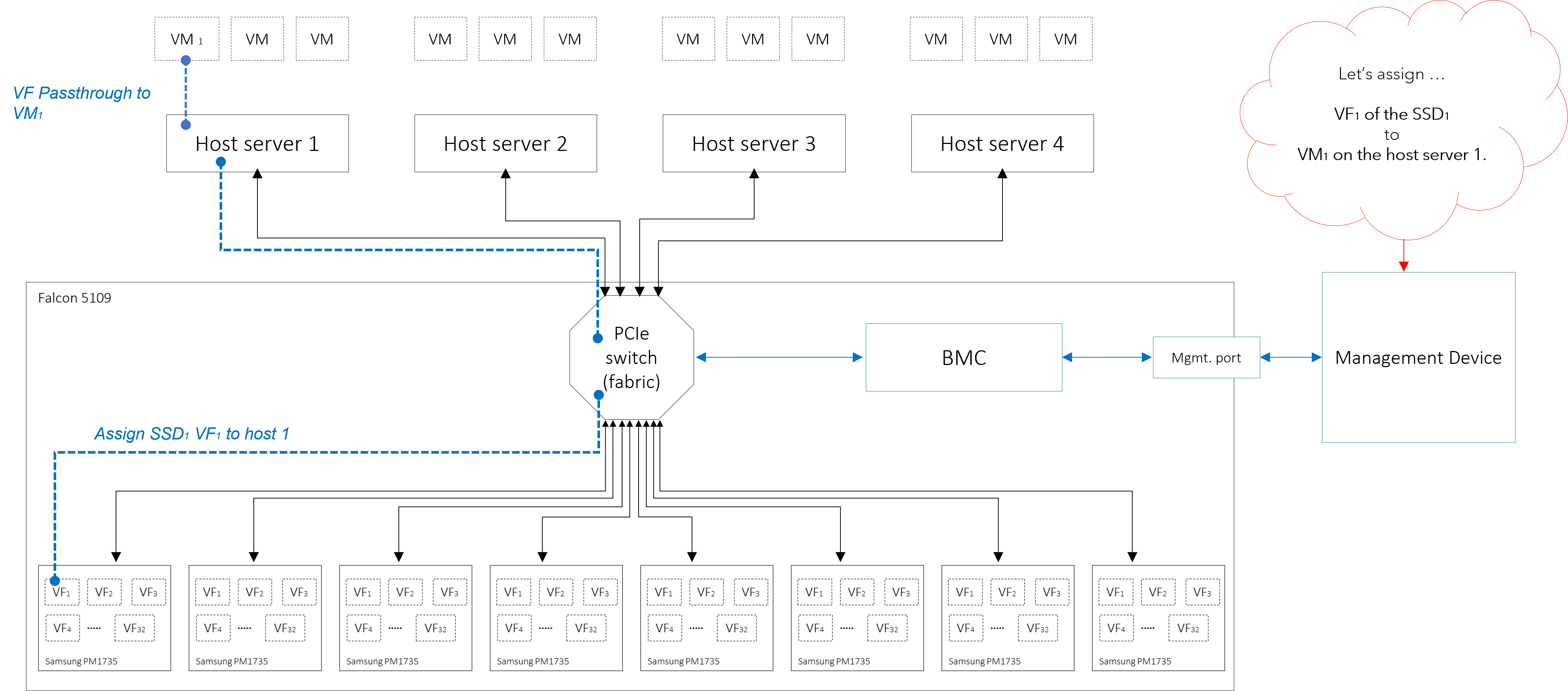

The concept is straightforward. Instead of installing NVMe SSDs in every server and virtualizing them for the VMs, why don’t we disaggregate the SSDs, pool them, and virtualize them externally? Then we could simply provision the VFs to any compute node that needs the storage resource. It would be much easier to manage all the SSDs in one place than to visit every individual server, especially in data centers with hundreds of servers. It also helps reduce hardware cost: because less SSD capacity is wasted, fewer SSDs should be required with this approach.

Multi-host SR-IOV:

Being able to virtualize SSDs externally and provision VFs to different hosts sounds like a cool idea. However, it would not be as efficient without the ability to move storage resources around quickly in a computing cluster. After all, storage and performance requirements are not static; they vary with the ongoing workloads.

Take AI development workloads as an example. The amount of storage needed differs in every training stage, and the only thing that stays the same is the need for performance. Being able to adjust storage resources while maintaining performance is therefore critical, and MR-IOV fits such a scenario well. Film production is another example. NVMe SSDs benefit video editing by improving the rendering experience with their high I/O performance, and when there are many video editors, being able to share SSD resources can reduce hardware cost significantly.

Nonetheless, making SSD SR-IOV available to multiple hosts at scale isn’t easy. Practical use requires several things: a processor able to handle all the signals and endpoint mappings, SSD devices that are multi-root aware (MRA) and support virtualization, and the ability to manage SSD virtual functions and namespaces (create, delete, attach, etc.). In this context, switching technology plays an important role in sharing NVMe SR-IOV among multiple hosts. Another issue is networking: the latency of the interconnect should be as low as possible to keep up with the performance of multiple SSDs while preserving data integrity.

Falcon 5208 multi-host NVMe SR-IOV solution

Falcon 5208 is the first storage solution introduced by H3 Platform and one of the first multi-host composable SSD solutions in the industry dedicated to virtualization applications. By integrating hardware and software, Falcon 5208 combines storage performance, agility, and flexibility. Let’s see how Falcon 5208 fulfills the requirements in each dimension.

Falcon 5208 solution architecture:

Performance

The Falcon 5208 comes with eight Samsung PM1735 PCIe SSDs (a choice of 3.2 TB or 6.4 TB). We selected the Samsung PM1735 PCIe add-in-card SSD as the storage device of this integrated solution because it has one of the best NVMe controllers that support SR-IOV. You can visit the official Samsung site for the detailed PM1735 specification. Together, the eight SSDs can reach 12 million IOPS. Like all our composable GPU products, the Falcon 5208 solution adopts a PCIe Gen 4 fabric, which fulfills high-speed interconnect requirements and enables multi-host connectivity at the same time. External PCIe cables connect the Falcon 5208 NVMe chassis directly to the host machines, providing a high-bandwidth, low-latency channel for data transmission. In addition, the NVMe protocol allows data to travel over PCIe all the way to the endpoints, eliminating the need for an extra encoding/decoding step.
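To put the 12 million IOPS figure in perspective, the short Python calculation below works out the per-SSD share and the data rate it implies. The text does not state the I/O size behind that figure, so the 4 KiB block size here is an assumption. The PCIe number is the raw Gen 4 lane rate (16 GT/s with 128b/130b encoding) and ignores packet overhead, so treat the result as a rough lower bound rather than a sizing rule.

```python
import math

SYSTEM_IOPS = 12_000_000   # from the text: eight SSDs together
SSD_COUNT = 8              # from the text
BLOCK_BYTES = 4096         # ASSUMPTION: 4 KiB per I/O, not stated in the text

# Share of the system IOPS that each SSD has to deliver.
per_ssd_iops = SYSTEM_IOPS // SSD_COUNT

# Aggregate data rate implied by the IOPS figure at the assumed block size.
aggregate_gb_s = SYSTEM_IOPS * BLOCK_BYTES / 1e9

# Raw PCIe Gen 4 throughput per lane and direction:
# 16 GT/s, 128b/130b line encoding, 8 bits per byte.
gen4_lane_gb_s = 16e9 * (128 / 130) / 8 / 1e9

# Minimum number of Gen 4 lanes needed to carry that rate (raw, no overhead).
lanes_needed = math.ceil(aggregate_gb_s / gen4_lane_gb_s)

print(f"per-SSD IOPS:        {per_ssd_iops:,}")
print(f"aggregate data rate: {aggregate_gb_s:.1f} GB/s at {BLOCK_BYTES} B per I/O")
print(f"Gen 4 lane rate:     {gen4_lane_gb_s:.2f} GB/s")
print(f"lanes needed (raw):  {lanes_needed}")
```

Under these assumptions each SSD contributes 1.5 million IOPS, and the system as a whole moves roughly 49 GB/s, more than a single Gen 4 x16 link can carry. That is consistent with the idea of spreading the VFs across several hosts.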

Flexibility and agility

Falcon 5208 is equipped with our latest management software, which incorporates all the features needed for SSD management. You can create virtual functions, create namespaces with the desired storage capacity, attach namespaces to VFs, dynamically provision VFs to host machines, and monitor the performance of every SSD in the system, all from one graphical user interface. Even an inexperienced system administrator can complete all configuration and resource provisioning with a few clicks.

Management

The management interface is unified in the Falcon 5208 architecture. Because all SSD devices sit under the Falcon 5208 system, we can reduce management complexity with a single BMC that controls the built-in PCIe switch, which dictates all behavior in the NVMe system. Chassis operation commands are sent from a standalone management device, making the Falcon 5208 system independent of the host machines where your VMs and applications are running. Users can operate the Falcon 5208 system, create VFs and namespaces, and provision them on the go without interrupting ongoing tasks.

SR-IOV assures data security

There are in fact many virtualization software products that aim to lower software overhead for better performance in virtual environments. They often achieve this by reducing the number of software layers between the VM and the devices. For example, a software may . The resulting performance can be great, but the underlying issue is that it is difficult to guarantee performance with virtualization software alone while maintaining a high level of data security, because adding software layers for protection creates more overhead, which in turn affects performance. In contrast, SR-IOV is more of a device-driven solution. Recall that in SR-IOV, a physical function is virtualized into VFs, and the storage capacity is divided into namespaces. Unless a namespace is given the shared property, the NVMe controller does not allow different system images (VMs) to access it. As a result, SR-IOV enforces this barrier at the device level, without the need for any additional software. Data security is complicated and critical to enterprise and data center storage applications, and we will discuss it in detail in a later blog post.

Here’s a quick summary for Falcon 5208:

- Multi-host connectivity

- High-performance storage

- VF and namespace management (create, attach, remove, etc.)

- Dynamic provisioning of resources (virtual functions)

- Graphical user interface that simplifies storage management

- Real-time device health monitoring

- Applicable to commodity servers

High-performance SSDs are becoming more and more popular, and SSD technology has been advancing rapidly: SSDs with higher capacity, IOPS, and throughput are introduced to the market every couple of months. Until the other computing components catch up, multi-host is perhaps the only way to match the performance of a single powerful SSD. We are likely to see more and more multi-host solutions coming up, especially for HPC, data centers, and the cloud. Falcon 5208 is just our first step, and you can expect more multi-host virtualization solutions from H3 Platform in the near future.